Frequently Asked Questions

Why color machine vision?

Monochrome (black-and-white or gray-scale) machine vision is now well established as a tool for alignment, gauging, and optical character recognition. Color is often useful for simplifying a monochrome problem by improving contrast or separation. However, the greatest potential uses of color vision, human or machine, are for cost-effective object identification, classification and assembly inspection. For a few of the many applications, see our Applications page.

What do we mean by color?

Color is a visual perception that results from the combined output of sets of retinal cones, each sensitive to a different portion of the visible part of the electromagnetic spectrum. Most humans have three sets of cones, with peak sensitivities in the red, green and blue portions of the spectrum respectively. A few women, and many birds, have four sets of cones, while anyone who is "color blind" will have fewer than three. Any perceived color can usually be created by combining any of a variety of sets of "primary" colors in the correct proportions. Most, but not all, color image capture devices (such as cameras and scanners) and color monitors used with machine vision use three color components: red, green and blue. We often refer to the combined values of these components, as stored in a device or on digital media, as color. We at WAY-2C have developed and recently patented a method to determine optimum spectral component combinations for differentiating any given set of classes.

What is a color space?

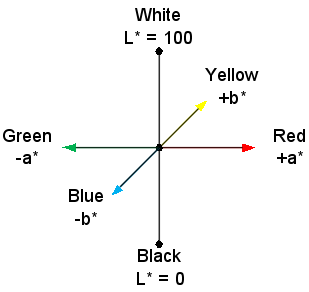

A color space is a means of representing the three components of a color as a position in a (usually) three-dimensional space. RGB, HSI, L*a*b* and CIE are some of the many color spaces that may be used, depending on the particular purpose of the analysis. A discussion of color spaces for video and computer graphics may be found on Wikipedia as well as many other fine sites.

What is RGB color space?

What is HSI color space?

What is L*a*b* color space?

I've been told that it is very important to work in HSI space rather than RGB space. Why does it matter what color space is used?

Many traditional applications of color machine vision are aimed at differentiating single-color objects from the background for alignment and gauging purposes. As long as the colors in the image are reasonably well saturated (vivid), hue will tend to remain relatively constant in the presence of shadows and other lighting variations. In such cases an image based on hue alone may work better with standard alignment and gauging tools than traditional gray-scale analysis.

Unfortunately, when colors have low saturation (lie near the black-gray-white axis), hue may be difficult to determine accurately; when saturation is zero, hue is undefined. For systems which must be able to differentiate all colors, saturated and unsaturated, HSI representation can introduce significant problems. We have found that for general identification of objects which may be multicolored, contrary to conventional wisdom, the disadvantages of HSI space will almost always outweigh any possible advantages.
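To make the low-saturation problem concrete, here is a minimal Python sketch of one common textbook RGB-to-HSI conversion (the geometric definition found in many image processing texts). That formula, and the function name `rgb_to_hsi`, are assumptions for illustration, not necessarily what any particular product uses. The sketch returns `None` for hue on the gray axis, and shows that two nearly gray pixels, differing by no more than one count per channel, can land roughly 240 degrees apart in hue.

```python
import math
from typing import Optional, Tuple


def rgb_to_hsi(r: float, g: float, b: float) -> Tuple[Optional[float], float, float]:
    """Convert an RGB triple (e.g. 0-255) to (hue in degrees, saturation 0-1, intensity).

    Uses the common geometric HSI definition (an assumption for this sketch).
    Hue is returned as None where it is undefined, i.e. when R == G == B.
    """
    intensity = (r + g + b) / 3.0
    if intensity == 0:
        # Pure black: saturation is taken as 0 and hue is undefined.
        return None, 0.0, 0.0
    saturation = 1.0 - min(r, g, b) / intensity

    numerator = 0.5 * ((r - g) + (r - b))
    denominator = math.sqrt((r - g) ** 2 + (r - b) * (g - b))
    if denominator == 0:
        # Only happens when R == G == B, so saturation is zero here too.
        return None, saturation, intensity

    # Clamp to guard against tiny floating point excursions outside [-1, 1].
    cos_theta = max(-1.0, min(1.0, numerator / denominator))
    theta = math.degrees(math.acos(cos_theta))
    hue = theta if b <= g else 360.0 - theta
    return hue, saturation, intensity


if __name__ == "__main__":
    # A fully saturated red: hue 0, saturation 1.
    print(rgb_to_hsi(255, 0, 0))
    # Two almost-gray pixels, at most one count apart per channel:
    print(rgb_to_hsi(129, 128, 128))  # hue near 0 degrees (reddish)
    print(rgb_to_hsi(128, 128, 129))  # hue near 240 degrees (bluish)
    # Exactly gray: hue is undefined.
    print(rgb_to_hsi(128, 128, 128))
```

Both near-gray pixels have saturation well under 1%, so a one-count change from sensor noise is enough to swing the hue across most of the color wheel.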

Which color space does WAY-2C use?

What is supervised classification?

Supervised classification is classification in which a system, living or machine, learns to recognize objects or situations from the examples of a trainer who knows the "correct answers". In contrast, unsupervised learning involves the person or machine learning on their own, without guidance from a trainer.

What is information theory?

Information (or communication) theory is based on the pioneering work (1) of Claude Shannon of Bell Labs and MIT during the 1940s. It is concerned with understanding, measuring, and optimizing the efficiency of information transfer. As such, it deals with non-parametric probability distributions of random events such as letters in text or colors in an image. Information theory is central not only to modern communication technology, but also to the understanding of natural languages and of information transfer in other natural information processing systems. Organisms that transfer information with less than optimum efficiency from sense organs, such as the eyes, to the portions of the brain that must take appropriate action may have a significantly lower chance of long-term survival.

What is Minimum Description Classification?

Minimum description is an information-theory-based approach to supervised pattern recognition that handles complex data distributions well. It provides a unifying framework for data analysis based on minimizing the amount of information necessary to describe a set of observations (2). By comparing probability distributions, minimum description analysis provides maximum likelihood results while remaining consistent with the time-honored philosophy that the simplest explanation is the best.

Does WAY-2C have an interface for OpenCV functions?

Yes.

Does WAY-2C use OpenCV functions for identification?

No. It uses a different set of functions, with algorithms specially designed for rapid, robust classification and anomaly detection in color and similar images. Based on insights from their information theoretic foundation, these are typically very much faster and more robust than those offered by OpenCV. At present, OpenCV functions are used only sparingly, for blob-related operations.

Is WAY-2C only for color-based identification?

No.

Aren't convolutional neural nets and deep learning based artificial intelligence already surpassing the classification methods used by WAY-2C?

No. Not at all! It's true that such techniques can be very helpful in identification where an undetermined mixture of different attributes may be required. However, this often comes at a high computational cost, and requires large training sets, considerable expertise, and retraining each time a new combination of classes of interest is chosen. Moreover, deep neural net systems can be shown to be easily fooled.

In contrast,

Can WAY-2C generate gray-scale images for use by traditional machine vision tools?

Yes.

Does WAY-2C offer fill-in filtering?

No. Some of our competitors regard color variability, hot spots, shadows, etc. as complexities that fill-in filtering must eliminate. This may have some truth if the objective is to measure the color of an item. However, it is certainly not true if the objective is to identify items based on their color distributions. In general, filtering has the potential to remove distinguishing information and reduce the likelihood of delivering the most accurate color-based identification. By efficiently using the whole unfiltered color distribution for each class of interest

Do single-chip camera color filter arrays cause problems?

We've never seen any. WAY-2C's mission is color-based identification, not color measurement. Identification is based on comparison of complete test and reference color frequency distributions rather than individual pixel values. As long as the distributions are obtained using identical camera and lighting setups, any noise introduced by the filter array usually has little effect.

Does WAY-2C use correlation for distribution matching?

No. Conventional (Pearson) correlation is inconsistent with the maximum likelihood methodology.
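A small numeric illustration of how correlation and likelihood can disagree. In the hypothetical three-bin example below, the test histogram correlates more strongly with reference `p1` than with `p2`, yet `p1` assigns zero probability to a bin containing 10% of the test pixels, so under a multinomial likelihood model the test sample is impossible for `p1`. The bin values are invented for illustration, and the multinomial log-likelihood is a generic textbook model assumed here, not a description of WAY-2C's internal matching criterion.

```python
import math
from typing import Sequence


def pearson(x: Sequence[float], y: Sequence[float]) -> float:
    """Conventional (Pearson) correlation coefficient of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)


def log_likelihood(counts: Sequence[int], probs: Sequence[float]) -> float:
    """Multinomial log-likelihood (up to a constant) of bin counts under probs."""
    total = 0.0
    for n, p in zip(counts, probs):
        if n == 0:
            continue  # empty test bins contribute nothing
        if p == 0.0:
            return -math.inf  # an observed color the model says cannot occur
        total += n * math.log(p)
    return total


# Hypothetical 3-bin color histograms.
test_counts = [60, 30, 10]
test_freqs = [c / sum(test_counts) for c in test_counts]
p1 = [0.9, 0.1, 0.0]
p2 = [0.5, 0.25, 0.25]

print(pearson(test_freqs, p1), pearson(test_freqs, p2))  # about 0.95 vs 0.92
print(log_likelihood(test_counts, p1), log_likelihood(test_counts, p2))  # -inf vs finite
```

Correlation ranks `p1` first; maximum likelihood rules it out entirely.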

I've heard a lot about the need for complex and specialized algorithms for color based identification. Don't I need very powerful computers or special expertise for this type of application?


Our experience shows that most practical color-based classifications can be performed with our simple, consistent, minimum description approach and, unlike CNNs and deep learning, are easily handled by modern personal computers. After all, birds, bees and other creatures with small brains and little formal mathematical training are adept at recognizing objects based on color distributions.
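To give a feel for how little computation distribution-based classification can need, here is a minimal Python sketch of minimum description length classification in its simplest generic form. Each reference class is modelled by its smoothed color frequency distribution, the test pixels are "encoded" with each model, and the class giving the shortest code length in bits wins. Shortest code length under a model is equivalent to maximum likelihood under that model. The add-one (Laplace) smoothing, the function names and the toy color labels are all assumptions for illustration; this is not the patented WAY-2C method.

```python
import math
from collections import Counter
from typing import Dict, Hashable, Sequence


def reference_model(
    samples: Sequence[Hashable], alphabet: Sequence[Hashable], alpha: float = 1.0
) -> Dict[Hashable, float]:
    """Smoothed color frequency distribution for one reference class.

    Add-alpha smoothing (an assumption here) keeps every color in the
    alphabet at a nonzero probability, so no pixel has an infinite code length.
    """
    counts = Counter(samples)
    total = len(samples) + alpha * len(alphabet)
    return {color: (counts[color] + alpha) / total for color in alphabet}


def description_length_bits(test: Sequence[Hashable], model: Dict[Hashable, float]) -> float:
    """Bits needed to encode the test pixels with an ideal code built from `model`."""
    return -sum(math.log2(model[color]) for color in test)


def classify(test: Sequence[Hashable], models: Dict[str, Dict[Hashable, float]]) -> str:
    """Return the class whose model gives the shortest description of `test`."""
    return min(models, key=lambda name: description_length_bits(test, models[name]))


if __name__ == "__main__":
    # Quantized colors are represented by simple labels in this toy example.
    alphabet = ["red", "green", "blue", "white"]
    models = {
        "apple": reference_model(["red"] * 8 + ["green"] * 2, alphabet),
        "lime": reference_model(["green"] * 9 + ["white"] * 1, alphabet),
    }
    test = ["red"] * 5 + ["green"] * 1
    print(classify(test, models))  # apple
```

In this sketch the work grows only linearly with the number of test pixels and reference classes; in a real system the labels would be quantized color cells rather than names.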


Can WAY-2C recognize textures?

Since textures in images affect the color distribution statistics, much textural information is automatically included in all

Why does WAY-2C succeed where the competition fails?

All competing systems of which we are aware offer color as an extension to their traditional monochrome machine vision products. In contrast,

Many traditional color machine vision systems that offer statistical matching analyze the three color components separately. However, both common sense and rigorous analysis show that this approach throws away critical color information, dramatically decreasing the number of color patterns that can be distinguished and increasing the probability of errors.
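The information lost by analyzing the components separately is easy to demonstrate. In the toy Python example below (pixel values chosen purely for illustration), a half-red, half-green image and a half-yellow, half-black image have exactly the same red, green and blue histograms when each channel is examined on its own, yet their joint three-dimensional color distributions have no colors in common.

```python
from collections import Counter
from typing import List, Tuple

Pixel = Tuple[int, int, int]


def per_channel_histograms(pixels: List[Pixel]) -> Tuple[Counter, Counter, Counter]:
    """Separate R, G and B histograms, as used by component-by-component analysis."""
    return (
        Counter(p[0] for p in pixels),
        Counter(p[1] for p in pixels),
        Counter(p[2] for p in pixels),
    )


def joint_histogram(pixels: List[Pixel]) -> Counter:
    """Joint histogram over complete (R, G, B) triples."""
    return Counter(pixels)


# Half red and half green pixels vs. half yellow and half black pixels.
red_green = [(255, 0, 0), (0, 255, 0)] * 50
yellow_black = [(255, 255, 0), (0, 0, 0)] * 50

print(per_channel_histograms(red_green) == per_channel_histograms(yellow_black))  # True
print(joint_histogram(red_green) == joint_histogram(yellow_black))  # False
```

Any method that looks only at the three separate histograms must treat these two very different images as identical.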

Also, traditional statistical matching methods for color machine vision are based on simplifying assumptions about the nature of the color distribution, such as the assumption that it is normal (Gaussian). These assumptions are valid in only a tiny fraction of the potential applications of color machine vision; when they are invalid, the classification methods based on them are more likely to produce wrong answers.
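As a simple illustration of how a Gaussian-style summary can hide differences in distribution shape, the Python sketch below builds two hypothetical sets of single-channel values with identical mean and variance. A method that describes each class only by its mean and variance cannot tell them apart, while their frequency distributions are plainly different: one has two separate clusters with nothing at the mean, the other is concentrated at the mean. The values are invented for illustration.

```python
import statistics
from collections import Counter

# Two hypothetical samples of one color component (e.g. 8-bit values).
bimodal = [100] * 100 + [200] * 100            # two separate clusters
peaked = [50] * 25 + [150] * 150 + [250] * 25  # most values at the mean


def gaussian_summary(values):
    """Mean and population variance: all that a normal-distribution model retains."""
    return statistics.mean(values), statistics.pvariance(values)


print(gaussian_summary(bimodal))  # mean 150, variance 2500
print(gaussian_summary(peaked))   # mean 150, variance 2500

# The full frequency distributions, by contrast, share no values at all.
print(sorted(Counter(bimodal).items()))  # [(100, 100), (200, 100)]
print(sorted(Counter(peaked).items()))   # [(50, 25), (150, 150), (250, 25)]
```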

By analyzing all the color components simultaneously, and using a more appropriate matching criterion based on rigorous statistical analysis and information theory,

If you are disappointed with the performance of your present color machine vision system, and are serious about wanting to improve it, contact us. We'll give you an honest, no-obligation appraisal of whether

Why don't other machine vision companies use the same methods as WAY-2C?

We can only speculate, but it seems to us that there are several reasons: different origins, lack of familiarity with the methods, and the cost of developing new code and interfaces.

Traditional color machine vision developed naturally and incrementally from monochrome vision along a perfectly sensible path, building on knowledge from printing, video camera and video display technologies. The focus was on individual pixels, using well-established mathematical tools familiar to all engineers. These methods, suited to measurements characterized by normal (Gaussian) distributions, work reasonably well when color distributions are simple and approximately Gaussian. Unfortunately, when faced with the need to classify based on complex color distributions, the same tools tend to give unreliable results and/or be awkward to use.

WAY-2C originated in a military need to characterize and understand very small earth motions containing many steps, spikes and discontinuities separated by periods of monotonous drift. These geophysical motion distributions were far from Gaussian, so using the familiar mathematical tools immediately led to wildly erroneous results. After considerable research and some serendipity, it became clear that the solution lay in abandoning tools appropriate for Gaussian distributions and concentrating only on those that could work for any data distribution. Applying the appropriate tools, already developed for information theory, produced remarkably good identification results, particularly when regions with complex coloration were involved. The result was a patent on the methods. The relatively low initial demand for color-based identification, together with the protection provided by the patent, allowed us the time to design, develop and optimize the large amount of unique code required. Between the patent application and the arrival of the first WAY-2C customer, we were able to devote over six years to the project.

In contrast, no company already offering traditional color machine vision would be able to put off a potential customer long enough to develop the code for a new method and a new user interface. Unfortunately, as time has passed, the traditional methods continue to be taught in courses, incorporated into marketing materials and locked into certifications, so many remain unaware and/or suspicious of WAY-2C's far more powerful methods.

Does WAY-2C require tedious individual color picking, threshold or range setting?

No. After all, humans and animals don't normally use thresholds for color-based classification. Why should your color machine vision system? Some old-fashioned systems which rely on separate analysis of the color components try to classify complex color distributions by a few manually set thresholds or picked colors. Instead,

In the verification/anomaly detection mode, when only a single reference class is used,

How can I tell whether WAY-2C will work in my application?

Demonstrations, including prototype scripts for new applications, can usually be arranged.

We can also put you in touch with actual users who can share their experiences.

Ask us about your application and/or download a free, no-obligation trial copy.

Can I write my own GUI to interface to WAY-2C?

Yes. Most integrators and OEMs do.

Why do you use that ugly gray background for the WAY-2C website?

For technical reasons, which we won't go into here, images captured from some machine vision video cameras appear much darker on computer monitors than they do on the normal TV monitors for which such cameras were originally designed. This is particularly true when the images are viewed against a very light or white background on the monitor. We could, of course, lighten the images with almost any image editing software before placing them on our website. Unfortunately, if we did this, the images on the website would no longer represent undoctored examples of